Web Scraping

All nodes are only available on the hosted n8n version and require you to install the CustomJS community package. See Installation.

The CustomJS Scraper node for n8n handles complex, dynamic web scraping, including sites that require user interaction (clicking, typing) or JavaScript rendering.

Scraper node workflow

- Start your workflow with a Start node using a fixed website URL, or pull URLs dynamically from another source such as a Google Sheets node.

- Add the Scraper (customJS) node.

- Configure the Website URL to target the page you want to scrape.

- Define user actions to simulate interaction with the page, for example:

  ```
  click('#button')
  type('#search', 'my query')
  wait(2000)
  ```

- Select the output type:

  - Raw HTML: to extract the website’s source for further parsing.

  - Screenshot (PNG): to capture a visual snapshot of the page.

- Process the scraped data:

  - If you extracted HTML, connect the output to the HTML Extract node to parse structured elements.

  - If you captured a screenshot, save it to storage or send it in a notification (for example, with the SMTP or Slack node).

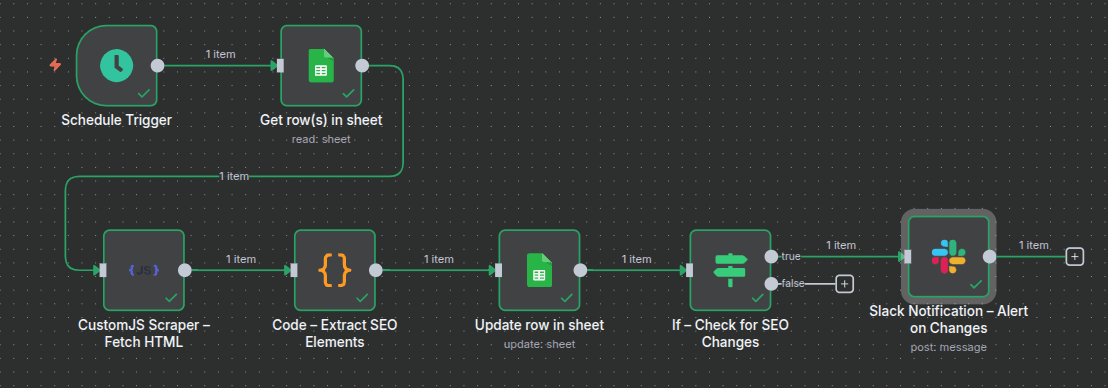
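To illustrate what parsing the Raw HTML output involves, here is a minimal standalone Python sketch that uses only the standard library's `html.parser`. It is not how the HTML Extract node works internally. The `SeoExtractor` class, the `extract_seo_fields` helper, and the choice of fields (page title, meta description, `<h1>` headings) are hypothetical, picked because they are common SEO signals.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class SeoExtractor(HTMLParser):
    """Collects the <title>, meta description, and <h1> texts from raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.description = ""
        self.h1: List[str] = []
        self._capturing: Optional[str] = None  # "title" or "h1" while inside one

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = (attributes.get("content") or "").strip()
        elif tag in ("title", "h1"):
            self._capturing = tag
            if tag == "h1":
                self.h1.append("")  # start a new heading entry

    def handle_endtag(self, tag):
        if tag == self._capturing:
            self._capturing = None

    def handle_data(self, data):
        # Text inside nested tags (e.g. <h1>Hi <em>there</em></h1>) is appended too.
        if self._capturing == "title":
            self.title += data
        elif self._capturing == "h1":
            self.h1[-1] += data


def extract_seo_fields(html: str) -> Dict[str, object]:
    """Parse raw HTML (such as a scraped page source) into a few SEO fields."""
    parser = SeoExtractor()
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.description,
        "h1": [text.strip() for text in parser.h1],
    }
```

For example, `extract_seo_fields("<title>Acme</title>")` returns `{'title': 'Acme', 'description': '', 'h1': []}`. A subclass of `HTMLParser` keeps the sketch dependency-free; a real workflow would more likely rely on the HTML Extract node's CSS selectors.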

n8n workflow template: Monitor competitor SEO changes with CustomJS scraper, Google Sheets & Slack alerts

You need to set up Slack and Google Sheets credentials in n8n to use this template.

This workflow template:

- Gets URLs of competitor websites from Google Sheets.

- Scrapes each website.

- Extracts HTML or screenshots you can use in the next steps of your workflow.
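The template's own comparison logic is not described here. As a hedged sketch of the "monitor changes" idea, assume you store the fields from the previous scrape of a URL (for example, in a Google Sheets row) and compare them with the fields extracted from the current scrape. The `diff_seo_snapshots` function and the dictionary snapshot format are hypothetical.

```python
from typing import Dict, List


def diff_seo_snapshots(
    url: str, previous: Dict[str, object], current: Dict[str, object]
) -> List[str]:
    """Return one alert line per field whose value differs between two scrapes of url."""
    alerts = []
    # Check every field present in either snapshot, in a stable (sorted) order.
    for field in sorted(set(previous) | set(current)):
        before = previous.get(field)  # None if the field was missing before
        after = current.get(field)    # None if the field is missing now
        if before != after:
            alerts.append(f"{url}: {field} changed from {before!r} to {after!r}")
    return alerts


if __name__ == "__main__":
    old = {"title": "Acme Pricing", "description": "Plans for teams"}
    new = {"title": "Acme Pricing | Teams", "description": "Plans for teams"}
    # Join the alerts into one message body, e.g. for a Slack notification.
    print("\n".join(diff_seo_snapshots("https://example.com", old, new)))
```

An empty list means nothing changed, so a workflow could skip the Slack step in that case and only send an alert when at least one line is returned.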